A crucial step in planning an optical system is selecting the camera. When chosen correctly, the camera becomes a seamless module in the system that “simply” acquires images and transfers them to our processing unit. There is a very wide selection of cameras and sensors out there, made by many great companies and packed with lots and lots of wonderful features. In this post we will delve into the aspects of the camera we should take into consideration before choosing a camera model for our system. Note that I will refer to the camera as a whole, without differentiating the sensor from the camera. If your application deals with the sensor separately from the camera that surrounds it, this post probably won’t tell you anything you don’t already know.

When we take a close look at a camera’s specification sheet, we see that it is never short. A camera has many parameters, all of which may eventually affect how well our optical system performs. The important ones are listed below.

One quick note about EMVA (the European Machine Vision Association) before we go into these parameters. EMVA maintains standards for the specification, measurement and SW interface of machine vision sensors and cameras. You do not have to be an expert in EMVA standards to operate a camera in your application, but when a feature or parameter is critical for your system, it can be very helpful to understand how it is defined and measured, and whether the camera vendor complies with these standards.

Sensor Technology (Wavelengths & Quantum Efficiency)

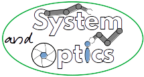

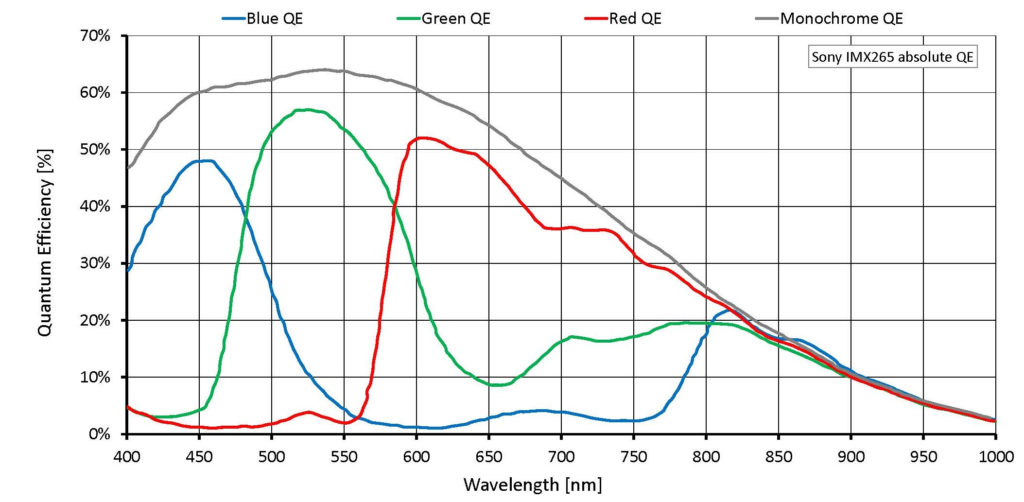

Know your wavelength range and light budget. VIS applications require different sensor technologies than IR or UV applications. A NIR application may use an enhanced-NIR sensor or an InGaAs sensor, but their quantum efficiency (QE), i.e. the sensitivity as a function of wavelength, differs. If you are light-starved in a certain wavelength range, make sure the sensor is not also weak in that range. The two graphs above show the quantum efficiency curves of two different cameras for the VIS-NIR range, each integrating a different Sony sensor model.

Looking at the monochrome (black) curves, if your light source is weak in the 400 nm region or very strong in the 800 nm region, this alone may settle one aspect of the choice between these sensor models.
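To put numbers on the light budget, a rough sketch like the one below converts optical power per pixel into photo-electrons, using a QE value read off a curve such as the ones above. The power, exposure and QE values are hypothetical placeholders, and the sketch assumes monochromatic light and ignores optical losses, crosstalk and noise.

```python
PLANCK_H = 6.62607015e-34      # J*s (exact SI value)
SPEED_OF_LIGHT = 2.99792458e8  # m/s (exact SI value)


def photons_per_pixel(power_w: float, exposure_s: float, wavelength_nm: float) -> float:
    """Photons reaching one pixel during an exposure, for monochromatic light."""
    photon_energy_j = PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return power_w * exposure_s / photon_energy_j


def electrons_per_pixel(power_w: float, exposure_s: float,
                        wavelength_nm: float, qe: float) -> float:
    """Photo-electrons generated; qe (0..1) is read off the vendor's QE curve."""
    return qe * photons_per_pixel(power_w, exposure_s, wavelength_nm)


# Hypothetical light budget: 1 pW per pixel, 10 ms exposure, two QE readings
for wavelength_nm, qe in [(450, 0.60), (850, 0.25)]:
    signal_e = electrons_per_pixel(1e-12, 10e-3, wavelength_nm, qe)
    print(f"{wavelength_nm} nm: about {signal_e:.0f} e- per pixel")
```

Running the same power through two wavelengths makes it easy to see whether a weak spectral region of your source coincides with a weak region of the sensor.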

Pixel Size, Pixel Resolution and Sensor Size

Well, this is one of the obvious ones that is never missed, so I will keep it short. If we have a FOV of 35 mm and need a resolution of 25 µm, a sensor 1024 pixels wide will definitely not be enough. So again, without going into the calculations, make sure your pixel size covers your Nyquist frequency with margin to spare, and that you have enough pixels of that size to cover your FOV with your optics and whatever algorithm you are using. One more word about the pixel size and the Nyquist calculation: you also have to cover the diagonal of the pixel, not only its width and height, so that features that are not aligned with the camera’s X and Y axes are fully resolved as well. Note that the optical design may shift a bit depending on the camera selection; the camera is an inseparable part of the optical system. A word of caution: too many pixels may cause bottlenecks down the line in FPS, data transfer, algorithm cycle time and, of course, unit price. Make sure you are not overloading your system with unnecessary data.
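The pixel-count check from the 35 mm / 25 µm example can be sketched as follows. It assumes the 25 µm is an object-space resolution (so the count does not depend on magnification), applies Nyquist as two pixels per resolved element, and implements the diagonal rule by shrinking the allowed pitch by √2. The `margin` parameter is a hypothetical knob for the spare.

```python
import math


def min_pixels_across(fov_mm: float, resolution_um: float,
                      include_diagonal: bool = True, margin: float = 1.0) -> int:
    """Minimum pixel count across the FOV to sample an object-space resolution.

    Nyquist: at least 2 pixels per resolved element. With include_diagonal,
    the pitch is further divided by sqrt(2) so that the pixel diagonal, not
    only its width, satisfies Nyquist. margin > 1 adds spare pixels.
    """
    max_pitch_um = resolution_um / 2.0
    if include_diagonal:
        max_pitch_um /= math.sqrt(2.0)
    return math.ceil(margin * fov_mm * 1000.0 / max_pitch_um)


print(min_pixels_across(35, 25, include_diagonal=False))  # 2800
print(min_pixels_across(35, 25))                          # 3960
```

Either way, a 1024-pixel-wide sensor falls far short, and the diagonal rule alone adds about 40% to the pixel count, which feeds straight into the data-load caution above.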

Area / Line Scan

Sensor size and pixel resolution are always mentioned in the same breath as the type of sensor, i.e. whether it is a line scan or an area scan sensor. A line scan sensor has only one or a few rows of pixels, so in practice each frame is a single line along one axis; in scanning applications the second axis is built up by moving the object (or the camera) past the sensor. Line scan cameras usually enjoy a very high line rate (see FPS below), but their ability to provide the user with a human-readable image depends on the image processing done down the road. They are mainly used in applications like conveyor scanning, spectrometers and so on. The considerations on this list differ depending on whether a line scan or an area scan camera is chosen, so decide which of the two suits your application best before continuing.
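For the conveyor case, the line rate a line scan camera must sustain follows directly from the conveyor speed and the object-space pixel size. This is a minimal sketch assuming square pixels in the scanned image (the object advances exactly one pixel per line); the 20 kHz camera is a hypothetical example.

```python
def required_line_rate_hz(conveyor_speed_m_per_s: float, object_pixel_um: float) -> float:
    """Line rate at which the object advances exactly one object-space pixel per line,
    i.e. square pixels in the scanned image."""
    return conveyor_speed_m_per_s * 1e6 / object_pixel_um


def max_speed_m_per_s(line_rate_hz: float, object_pixel_um: float) -> float:
    """Fastest conveyor speed a given line rate can keep up with at that pixel size."""
    return line_rate_hz * object_pixel_um / 1e6


print(required_line_rate_hz(1.0, 100))  # 10000.0 lines/s for 1 m/s at 100 um per pixel
print(max_speed_m_per_s(20_000, 100))   # 2.0 m/s for a hypothetical 20 kHz camera
```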

Fill Factor and MTF

The camera sensor has its own MTF, which multiplies the MTF of all the optics that direct the light onto the sensor. One of the major factors in the sensor’s MTF is its effective fill factor, a percentage that tells us how much of the pixel’s area actively senses photons. To be honest, if your pixel size is significantly smaller than your optical resolution (for example, you sample at more than 5 times the Nyquist requirement), you probably won’t feel the impact of the sensor’s MTF. It is when the pixel size approaches its upper limit that the fill factor starts to make an impact.
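A common first-order model of the sensor’s contribution is the pixel-aperture MTF, |sinc(f·a)|, where a is the width of the light-sensitive area. The sketch below assumes a centred square active area of width a = pitch·√(fill factor), which is an idealisation (microlenses, irregular active areas and crosstalk change the picture), and cascades it with a hypothetical lens MTF value by multiplication. In this geometric model a smaller active area actually raises the aperture MTF, at the cost of sensitivity and more aliasing.

```python
import math


def pixel_aperture_mtf(freq_cyc_per_mm: float, pitch_um: float,
                       fill_factor: float = 1.0) -> float:
    """|sinc(f * a)| with sinc(x) = sin(pi x) / (pi x), for a square active area
    of width a = pitch * sqrt(fill_factor) (idealised model)."""
    a_mm = pitch_um * 1e-3 * math.sqrt(fill_factor)
    x = freq_cyc_per_mm * a_mm
    if x == 0:
        return 1.0
    return abs(math.sin(math.pi * x) / (math.pi * x))


def system_mtf(*component_mtfs: float) -> float:
    """Cascaded MTF: the product of the component MTFs at the same frequency."""
    result = 1.0
    for m in component_mtfs:
        result *= m
    return result


pitch_um = 5.0
nyquist = 1.0 / (2 * pitch_um * 1e-3)           # cycles/mm at the sensor
sensor = pixel_aperture_mtf(nyquist, pitch_um)  # 2/pi, about 0.64, at 100% fill factor
optics = 0.30  # hypothetical lens MTF at this frequency, from the lens datasheet
print(f"sensor {sensor:.2f}, system {system_mtf(optics, sensor):.2f}")
```

At one fifth of Nyquist the same model gives a sensor MTF of about 0.98, which is why heavily oversampled systems barely feel it.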

Dynamic Range, Bit Depth & Full Well Capacity

The bit depth is simply how many bits per pixel we receive (8-bit, 10-bit, 12-bit etc.; in color cameras it will be higher). The dynamic range of a camera is the ratio between the highest and the lowest power per pixel that the camera can detect, usually quoted in [dB]. The full well capacity of a pixel, quoted in [e-], is how many electrons the pixel can store before saturating, i.e. before it outputs the highest value the bit depth allows. Together, these three specifications define the sensitivity levels of the camera, and combining them with the quantum efficiency gives you the camera’s power sensitivity per wavelength.
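A common back-of-the-envelope estimate takes the dynamic range as full well capacity divided by the dark (read) noise, then compares it with the bit depth; EMVA 1288 defines the measurement more rigorously. The sketch below also turns full well and QE into a saturation power per pixel at one wavelength. The datasheet numbers are hypothetical, and dark-current shot noise is ignored.

```python
import math

PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s


def dynamic_range(full_well_e: float, read_noise_e: float) -> tuple:
    """Approximate DR as full well / read noise, returned in dB and in bits."""
    ratio = full_well_e / read_noise_e
    return 20.0 * math.log10(ratio), math.log2(ratio)


def saturation_power_w(full_well_e: float, qe: float,
                       exposure_s: float, wavelength_nm: float) -> float:
    """Optical power per pixel that just fills the well at one wavelength."""
    photon_energy_j = PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return full_well_e / qe * photon_energy_j / exposure_s


db, bits = dynamic_range(10_000, 2.5)  # hypothetical: 10k e- full well, 2.5 e- read noise
print(f"DR = {db:.1f} dB = {bits:.1f} bits")  # a 10-bit output would not carry all of it
print(f"saturation at 550 nm: {saturation_power_w(10_000, 0.7, 1e-3, 550):.2e} W")
```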

Why am I telling you all this? Dynamic range becomes a limitation when your image has high power density in some areas and low power density in others. For example, if a laser spot is aimed at your object while the rest of the object is illuminated for imaging, you probably need a very high dynamic range (BTW, there are interesting camera technologies with a logarithmic rather than linear response for exactly this problem). Another example is a sensitive image in which gray-level differences of 1 µW must be resolved on top of a 10 mW average signal: without going into the exact calculation, an 8-bit depth will never be enough for that application. And last but not least on this subject, if your maximum expected power per pixel comes out at 1 mW and the saturation level at that wavelength is only 0.8 mW per pixel, you are bound to tackle the issue some other way, which you could have avoided in the first place.
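The two numeric cases above can be checked in a few lines. This is a crude sketch: it assumes the full signal level maps onto the top of the ADC range and ignores noise, which in practice must also stay below one gray level.

```python
import math


def required_bits(full_scale: float, smallest_step: float) -> int:
    """Bits needed so that one gray level (LSB) is no larger than smallest_step."""
    return math.ceil(math.log2(full_scale / smallest_step))


def saturation_headroom(max_power_per_pixel_w: float, saturation_power_w: float) -> float:
    """Ratio of expected peak power to saturation power; above 1.0 the pixel clips."""
    return max_power_per_pixel_w / saturation_power_w


print(required_bits(10e-3, 1e-6))         # 14 bits, far more than 8
print(saturation_headroom(1e-3, 0.8e-3))  # about 1.25, i.e. the pixel saturates
```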

(VIS) Color / Monochrome

As part of the sensor technology, when dealing with the VIS wavelength range, we must know whether the color of the different objects in our image makes a difference. Find out what algorithmic approach the application will take before deciding on this point. For example, sorting sweet peppers by color is an obvious case for a color camera, but detecting bird flock movement over an airfield is not necessarily such an obvious choice. Note that color cameras carry inherent optical resolution penalties (in the common Bayer-filter sensors, each pixel sees only one color and the full-color image is interpolated), on top of FPS and transfer-time overheads, and these must be taken into account in the camera selection process.
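To see where the resolution penalty comes from, the sketch below counts photosites per color for an RGGB Bayer mosaic, assuming that common layout (other color filter arrays and 3-chip cameras differ). The 1920×1080, 3.45 µm sensor is a hypothetical example.

```python
def bayer_rggb_sampling(width_px: int, height_px: int, pitch_um: float) -> dict:
    """Photosites per color and sampling pitch along rows/columns for an RGGB mosaic.

    Each 2x2 cell holds one R, two G and one B photosite. Along any row or
    column every color repeats every 2 pixels (green is denser only along the
    diagonals). Demosaicing interpolates the missing values; it adds no samples.
    """
    cells = (width_px // 2) * (height_px // 2)
    return {
        "mono": {"samples": width_px * height_px, "pitch_um": pitch_um},
        "R": {"samples": cells, "pitch_um": 2 * pitch_um},
        "G": {"samples": 2 * cells, "pitch_um": 2 * pitch_um},
        "B": {"samples": cells, "pitch_um": 2 * pitch_um},
    }


for name, s in bayer_rggb_sampling(1920, 1080, 3.45).items():
    print(f"{name}: {s['samples']} samples, pitch {s['pitch_um']:.2f} um")
```

Compared with a monochrome version of the same sensor, red and blue are sampled at twice the pitch along each axis, which halves their Nyquist frequency.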

Global Shutter / Rolling Shutter

First, if you do not know the difference between a rolling shutter and a global shutter, take a look here. Know your system and object environment. If the object is stationary, your system is probably indifferent to the shutter type, but with a moving object or a fast-changing environment you will have to calculate very carefully the velocity of the image on the camera sensor and determine the longest delay (the “stable time”) between exposing the top row and the bottom row that you can allow without your image being smeared.
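A minimal sketch of that calculation, assuming the image moves sideways at constant speed and the rows of a rolling shutter start exposing a fixed line time apart (a global shutter exposes all rows at once, so this skew term is zero). The object speed, magnification, pitch, line time and row count are hypothetical.

```python
def image_speed_px_per_s(object_speed_mm_per_s: float, magnification: float,
                         pitch_um: float) -> float:
    """Speed of the object's image across the sensor, in pixels per second."""
    return object_speed_mm_per_s * magnification * 1000.0 / pitch_um


def rolling_shutter_skew_px(speed_px_per_s: float, line_time_s: float, rows: int) -> float:
    """Sideways shift between the first and last row of a rolling-shutter frame."""
    return speed_px_per_s * line_time_s * (rows - 1)


def max_line_time_s(speed_px_per_s: float, rows: int, max_skew_px: float) -> float:
    """Longest row-to-row delay that keeps the skew within max_skew_px."""
    return max_skew_px / (speed_px_per_s * (rows - 1))


# Hypothetical case: 100 mm/s object, 0.1x magnification, 5 um pixels, 1000 rows
v = image_speed_px_per_s(100, 0.1, 5.0)         # about 2000 px/s on the sensor
print(rolling_shutter_skew_px(v, 10e-6, 1000))  # about 20 px of skew with a 10 us line time
print(max_line_time_s(v, 1000, 1.0))            # about 0.5 us per row for <= 1 px skew
```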

Effective FPS and Exposure Time

FPS = frames per second. The exposure time is the time the camera collects light before it stops and moves on to the next frame. FPS and exposure time go hand in hand, since one limits the other: you cannot have more than 20 FPS with a 50 ms exposure time. On the camera side, the required exposure time is a function of the amount of light and the camera’s sensitivity; on the application side, it is limited by the allowed “stable time”, and the exposure time in turn bounds the maximum FPS. The FPS requirement of the application depends on the time resolution it needs to function properly, and the “stable time” also determines which shutter type our application allows. You can see in this particular example how many camera parameters interact and affect each other, and all of them have to be taken into account in the camera selection process.
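The interplay can be sketched as a feasibility check: the light budget sets a minimum exposure, motion sets a maximum, and the exposure caps the frame rate. Here the “stable time” is interpreted as a maximum allowed motion blur in pixels, which is one possible reading; the numbers are hypothetical.

```python
from typing import Optional, Tuple


def max_fps_from_exposure(exposure_s: float) -> float:
    """Upper bound on frame rate set by the exposure alone."""
    return 1.0 / exposure_s


def exposure_window_s(min_exposure_for_signal_s: float, speed_px_per_s: float,
                      max_blur_px: float) -> Optional[Tuple[float, float]]:
    """Feasible exposure range: long enough for the light budget, short enough
    that motion blur stays within max_blur_px. None means the requirements conflict."""
    max_exposure_s = max_blur_px / speed_px_per_s
    if min_exposure_for_signal_s > max_exposure_s:
        return None
    return (min_exposure_for_signal_s, max_exposure_s)


print(max_fps_from_exposure(50e-3))          # 20.0, the example from the text
print(exposure_window_s(2e-3, 2000, 1.0))    # None: 2 ms needed, blur allows only 0.5 ms
print(exposure_window_s(0.2e-3, 2000, 1.0))  # (0.0002, 0.0005)
```

A `None` result is the signal to go back to the light budget, the QE curve or the optics rather than to the camera settings.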

A very important word of caution: the FPS a manufacturer quotes in the brochure usually refers to “free-run” mode, where the camera starts grabbing the next frame as soon as the current frame has finished its exposure. In many cases, when a sync trigger is needed to start grabbing, the effective FPS is limited by other factors and may drop to as low as 50%. There is no rule of thumb here; if your application is sensitive to this issue, make your inquiries with the camera vendor.
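One simplified model that shows how the 50% case can arise: in free-run, exposure of the next frame overlaps readout of the previous one, so the slower of the two sets the period; in a non-overlapped trigger mode, each frame waits for exposure plus readout (plus any trigger latency). This is a hypothetical sketch; real trigger modes vary between cameras, and many support overlapped triggering, which is exactly why the vendor should be asked.

```python
def free_run_fps(exposure_s: float, readout_s: float) -> float:
    """Free-run with overlapped exposure and readout: the slower one sets the period."""
    return 1.0 / max(exposure_s, readout_s)


def triggered_fps(exposure_s: float, readout_s: float,
                  trigger_latency_s: float = 0.0) -> float:
    """Triggered, non-overlapped: each frame waits for the previous readout to finish."""
    return 1.0 / (trigger_latency_s + exposure_s + readout_s)


exp_s, readout_s = 10e-3, 10e-3  # hypothetical camera timing
print(free_run_fps(exp_s, readout_s))   # about 100 fps
print(triggered_fps(exp_s, readout_s))  # about 50 fps, half the free-run figure
```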

Connection & Electricity + Triggers

Here I’ll share an experience I once had: we found a lovely camera for our application, only to discover that it had a GigE connection, we had no spare Ethernet socket, and finding a suitable PCI Ethernet card that fit in our PC turned out to be a much harder task than finding the camera. The moral of this story: do not overlook the camera’s connectivity or its power input, and if you need HW triggers in/out for camera sync, make sure they are on the list as well.
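Connectivity is also where the “too many pixels” caution from the pixel-size section bites: the raw pixel stream has to fit through the link. A minimal sketch, assuming uncompressed pixels and a hypothetical `usable_fraction` allowance for protocol overhead (measure your own link rather than trusting this number):

```python
GIGE_LINE_RATE_BPS = 1e9  # nominal Gigabit Ethernet line rate


def raw_data_rate_bps(width_px: int, height_px: int, bits_per_pixel: int, fps: float) -> float:
    """Raw pixel payload per second, before any protocol overhead."""
    return width_px * height_px * bits_per_pixel * fps


def fits_link(data_rate_bps: float, link_bps: float, usable_fraction: float = 0.9) -> bool:
    """usable_fraction is an assumed allowance for protocol overhead."""
    return data_rate_bps <= link_bps * usable_fraction


rate = raw_data_rate_bps(2048, 2048, 8, 30)  # hypothetical 4 MP, 8-bit, 30 fps camera
print(f"{rate / 1e9:.2f} Gb/s, fits GigE: {fits_link(rate, GIGE_LINE_RATE_BPS)}")
```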

Cooling & Dark Noise

Some sensors need active cooling to operate properly, and most sensors are sensitive to temperature even if they do not officially need cooling. A change in temperature affects the dark noise (the electrical signal when no light hits the sensor) and may change the linearity of the camera’s active sensitivity range. If the camera requires cooling, or if every photon counts in your application, you have to take these parameters very seriously, as well as the electrical and mechanical peripherals that active or passive cooling brings with it.
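To gauge how much temperature matters, a rough sketch can scale a datasheet dark current to the expected operating temperature and combine its shot noise with the read noise. It assumes the dark current doubles every fixed number of degrees (often quoted as roughly 6 to 8 °C for silicon sensors; `doubling_c=7.0` here is an assumption, so check your sensor’s datasheet), and the 10 e-/s and 3 e- values are hypothetical.

```python
import math


def dark_current_e_per_s(dc_ref_e_per_s: float, t_ref_c: float, t_c: float,
                         doubling_c: float = 7.0) -> float:
    """Scale a reference dark current to temperature t_c, assuming it doubles
    every doubling_c degrees (rule-of-thumb assumption)."""
    return dc_ref_e_per_s * 2.0 ** ((t_c - t_ref_c) / doubling_c)


def dark_noise_e(dark_current: float, exposure_s: float, read_noise_e: float) -> float:
    """Dark-current shot noise and read noise added in quadrature, in electrons."""
    return math.sqrt(dark_current * exposure_s + read_noise_e ** 2)


dc_hot = dark_current_e_per_s(10.0, 25.0, 46.0)  # 80 e-/s after a 21 C rise (3 doublings)
print(dc_hot, dark_noise_e(dc_hot, 0.5, 3.0))    # 80.0 e-/s, 7.0 e- for a 0.5 s exposure
```

Comparing the result with the signal from the light budget shows whether cooling is a nice-to-have or a requirement.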

Size and Mechanics

No application has unlimited space, but sometimes, especially when the camera type is exotic, the camera dictates the mechanics. If there is a mechanical limitation, it must go into the list of considerations. Sometimes the lack of space can be resolved with an unpackaged or board-level camera. Keep an open mind for all kinds of mechanical solutions.

SW Interface

In most applications we have to integrate the camera into our SW. Different camera manufacturers have different SW interfaces with different functions. For example, if there is no Python interface to control your camera and you would have to implement a Python wrapper for all the camera control functions, that could be reason enough to cross that camera off your list. Note that many camera vendors have different board families, so two cameras may integrate the same sensor but on different boards. A different board usually means that some features controlled via the SW interface exist on one board but not on the other, and again this is something you would probably have to implement yourself at the end of the road, if that is possible at all.
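One way to make this comparison concrete is to write down the camera functions your application actually needs as an interface, then check each candidate SDK (and each board variant) against it to see how much wrapper code you would have to write. The sketch below is hypothetical throughout: the method names are illustrative and are not any vendor’s API, and `FakeCamera` is a stand-in for testing application code without hardware.

```python
from typing import Protocol


class CameraControl(Protocol):
    """The camera functions our application needs (hypothetical list)."""

    def set_exposure_us(self, exposure_us: float) -> None: ...
    def set_trigger_mode(self, external: bool) -> None: ...
    def grab(self) -> bytes: ...


class FakeCamera:
    """Stand-in without hardware; a real adapter would forward each call to the vendor SDK."""

    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self.exposure_us = 1000.0
        self.external_trigger = False

    def set_exposure_us(self, exposure_us: float) -> None:
        if exposure_us <= 0:
            raise ValueError("exposure must be positive")
        self.exposure_us = exposure_us

    def set_trigger_mode(self, external: bool) -> None:
        self.external_trigger = external

    def grab(self) -> bytes:
        return bytes(self.width * self.height)  # one all-zero 8-bit frame


def configure_and_grab(cam: CameraControl, exposure_us: float) -> bytes:
    """Application code depends only on the interface, not on a vendor SDK."""
    cam.set_exposure_us(exposure_us)
    cam.set_trigger_mode(external=True)
    return cam.grab()


frame = configure_and_grab(FakeCamera(640, 480), 500.0)
print(len(frame))  # 307200 bytes
```

Every method a candidate’s SDK cannot back directly is a line item of wrapper work to weigh in the selection.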

On-Board Processing

Nowadays more and more cameras have on-board processing units with many diverse features, from filtering up to neural network inference. It is up to you to decide which piece of HW will do the processing optimally and, accordingly, to verify that these features can indeed be applied in your chosen camera. Having said that, if you don’t use any of these fancy features, do not select a high-end camera that has them; that would be BOM price down the drain.

Camera Price

It was bound to arrive eventually, wasn’t it? Everything comes with a price. Right from the outset, know your budget limits; this in itself will shorten your camera candidate list significantly. Also know how sensitive you are to the camera price: there are applications where the camera makes up 90% of the BOM, so sensitivity to its price is high, and there are those where the camera costs less than 2% of the BOM, with no sensitivity to price at all.

There are of course more parameters, like camera and sensor longevity, integrated lenses, built-in autofocus, service, burnt pixels, acceptance criteria and tests, and so on. If this is your first camera selection process, make sure to have an experienced buyer or a mentor who has done it before at your side to add relevant input.

System Engineer or Optical Engineer?

One more word about camera selection: who does all this beautiful work? There is a constant debate in many R&D departments over who is in charge of camera selection (and updates, if needed) for an optical system. The list of candidates is long: at the top are the obvious two, the System Engineer and the Optical Designer, followed by the Electrical Engineer, the Physicist, the SW Team Manager and even the Project Manager.

I will begin with an important bottom line: regardless of who you think has the best-fitting skills on paper, if that person is unavailable for this task, give it to someone who has the time and availability to take care of the camera selection. It is an important enough task to be assigned to someone who will consider all the angles, so that it does not end in an oblivious, rash decision.

If we look closely at the parameters and considerations listed in this post, out of the long list of candidates only the System Engineer and the Optical Designer may have the skill set needed to make a conscious decision, provided the System Engineer has some knowledge of optics or the Optical Designer has some systems skills. If there is a role such as Optical Systems Engineer, that person would be perfect for the task; otherwise, the best option is to make one of these two the owner of the task, with the other serving as second in all technical matters.

That’s it for now. For some unknown reason I thought this post would be a short one, but once I started it was clear that camera selection is a complex and important process with many ins and outs, and the list and explanations grew longer and longer. I hope you find the process and tips here useful for your next camera endeavor.
